Rethinking the Production Testing of IoT Devices

Author: Timo Räty, Senior Principal Engineer, Bittium

Internet of Things (IoT) devices are often small, battery-powered products with limited functionality. A typical setup includes small sensors, a simple display and some form of communication, e.g. over Bluetooth Low Energy (BLE). All of the software runs on an MCU (Microcontroller Unit), which contains most of the control logic needed to drive the additional functionality, be it collecting data from sensors or communicating over BLE.

These products are typically manufactured in mass-production volumes, where BOM (Bill of Materials) costs are kept under tight control and production must run efficiently. The manufacturing of IoT devices includes some form of production testing for every unit: after the SMD (surface-mount device) assembly process, each unit is tested to ensure that all hardware components have been soldered properly, that no short circuits are present and, in general, that the parts of the unit work as they should. Once a unit has passed production testing at the factory, the final software is installed together with the SKU configuration, and the unit is packaged, ready for the customer.

The Typical Way of Testing

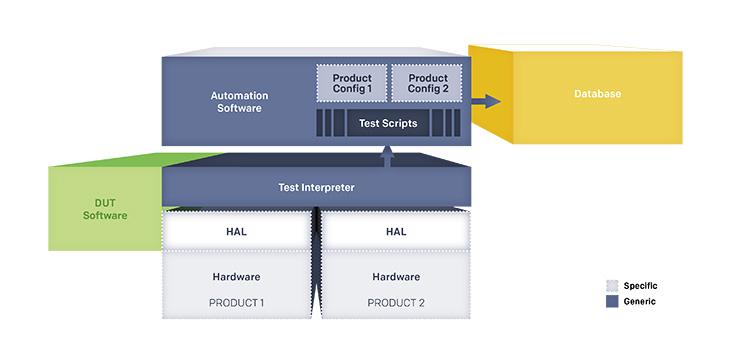

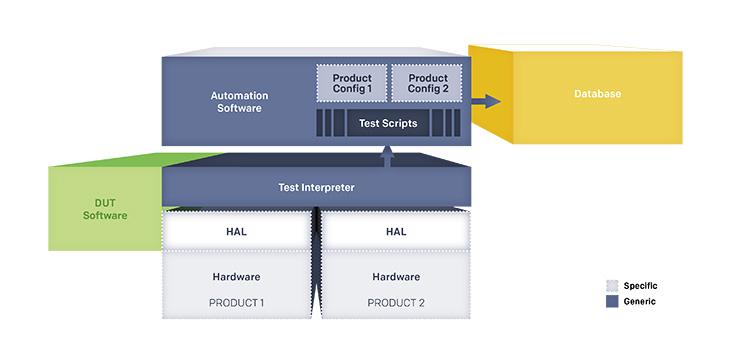

Normally, production testing is the first stage where the unit is powered up and, in most cases, starts to “run software”. We call this first software production testing software (PTSW), as it is used to test the manufactured product. Production testing itself consists of three separate entities, each with its own role in the factory: the production testing software in the device under test (DUT), the automation software that executes the tests and collects the results, and the background data storage that collects and stores the results per unit, identified by e.g. a serial number.
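To make the third entity more concrete, here is a minimal sketch of a per-unit result record as the automation might upload it to the background data storage. The struct, the `pt_format_result` function and the CSV line format are hypothetical illustrations, not the framework's actual storage format.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical result record: one row per executed test step, keyed by the
 * unit's serial number so the data storage can group results per unit. */
typedef struct {
    char serial[24];     /* unit identifier, e.g. a serial number */
    char test_name[32];  /* name of the executed test case */
    int  passed;         /* 1 = pass, 0 = fail */
    long measured;       /* raw value returned by the DUT, if any */
} pt_result;

/* Formats one record as a CSV line: serial,test,PASS|FAIL,value.
 * Returns the number of characters written (excluding the NUL),
 * or -1 if the output buffer was too small. */
int pt_format_result(const pt_result *r, char *out, size_t out_len)
{
    int n = snprintf(out, out_len, "%s,%s,%s,%ld",
                     r->serial, r->test_name,
                     r->passed ? "PASS" : "FAIL", r->measured);
    if (n < 0 || (size_t)n >= out_len)
        return -1;  /* truncated: do not upload a partial record */
    return n;
}
```

Keying every row by serial number keeps the storage side independent of the product: it only needs to know which unit a result belongs to.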

Back in the old days, it was common to develop the testing software as a separate software system for each product. This approach implemented most of the tests in software, inside the MCU. This development model produced many different architectures, with different designs and a large variety of software that basically does one simple thing: tests hardware components for existence and correct function. As you know, software tends to evolve, so we did some critical thinking: does it make sense to do the same thing differently each time, or could we come up with a more efficient approach? It turns out the concept can be made quite a bit simpler, achieving higher software reuse and quicker implementation at the same time!

The Next-Generation Production Testing Software Framework

Rethinking production testing started by looking at the core of the PTSW: what is common to all production testing, and could it be generalized? We did this by slicing the software into layers and generalizing as much of the communication, hardware access and test logic as possible. This was done according to the following principles:

- keep the test language always the same: common core protocol with the automation

- generalize as much of the hardware as possible: simplify testing of hardware blocks

- move test logic to automation (outside DUT) as much as possible

- keep support for product-specific extensions
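The first principle, a common core protocol, can be pictured as a command table on the DUT side that is identical in every product. This is a minimal sketch assuming a line-based text protocol; the command names `ping` and `version`, the `OK`/`ERR` reply prefixes and `pt_dispatch` are hypothetical, since the actual protocol is not described here.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical handler signature: receives the argument string and
 * writes a reply line for the automation. Returns 0 on success. */
typedef int (*pt_handler)(const char *args, char *reply, size_t reply_len);

typedef struct {
    const char *name;
    pt_handler  handler;
} pt_command;

static int cmd_ping(const char *args, char *reply, size_t reply_len)
{
    (void)args;
    snprintf(reply, reply_len, "OK pong");
    return 0;
}

static int cmd_version(const char *args, char *reply, size_t reply_len)
{
    (void)args;
    snprintf(reply, reply_len, "OK 1.0");  /* placeholder version string */
    return 0;
}

/* Core command table: the same in every product, so the automation always
 * speaks the same language to the DUT. Product-specific commands would be
 * added through a separate extension table (not shown). */
static const pt_command core_commands[] = {
    { "ping",    cmd_ping },
    { "version", cmd_version },
};

/* Dispatches one received line, e.g. "ping" or "version". */
int pt_dispatch(const char *line, char *reply, size_t reply_len)
{
    size_t name_len = strcspn(line, " ");  /* command name ends at first space */
    const char *args = line + name_len;
    while (*args == ' ')
        args++;

    for (size_t i = 0; i < sizeof core_commands / sizeof core_commands[0]; i++) {
        if (strlen(core_commands[i].name) == name_len &&
            strncmp(core_commands[i].name, line, name_len) == 0)
            return core_commands[i].handler(args, reply, reply_len);
    }
    snprintf(reply, reply_len, "ERR unknown command");
    return -1;
}
```

Keeping the table and reply format fixed is what lets test cases written for one product be replayed against another.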

With this approach, we created the next-generation production testing software framework, with a core design for the protocol and a specification for the HAL (Hardware Abstraction Layer) that each product must implement. Enforcing this division between the general and the product-specific parts ensures that every new implementation follows the design practices specified by the general framework, while each adaptation remains free to design its own product-specific implementation.
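A HAL specification of this kind could, for example, take the form of a table of function pointers that each product port fills in, while the generic framework code calls only through that table. The sketch below assumes that design; `pt_hal`, its members and `pt_i2c_read_id` are hypothetical names, and the fake port with its address, register and ID values is a host-side stand-in for illustration only.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical HAL contract: the framework calls only these functions and
 * each product port supplies its own implementations. Return 0 on success. */
typedef struct {
    int (*led_set)(unsigned led, int on);
    int (*uart_write)(const uint8_t *data, size_t len);
    int (*i2c_read)(unsigned bus, uint8_t addr, uint8_t reg,
                    uint8_t *buf, size_t len);
    int (*i2c_write)(unsigned bus, uint8_t addr, uint8_t reg,
                     const uint8_t *buf, size_t len);
} pt_hal;

/* Generic framework code: works on any product that provides a pt_hal. */
int pt_i2c_read_id(const pt_hal *hal, unsigned bus, uint8_t addr,
                   uint8_t id_reg, uint8_t *id_out)
{
    if (hal == NULL || hal->i2c_read == NULL)
        return -1;  /* this product port does not provide I2C (yet) */
    return hal->i2c_read(bus, addr, id_reg, id_out, 1);
}

/* Host-side fake port standing in for a real product's I2C driver. */
static int fake_i2c_read(unsigned bus, uint8_t addr, uint8_t reg,
                         uint8_t *buf, size_t len)
{
    (void)bus;
    if (addr == 0x76 && reg == 0xD0 && len == 1) {
        buf[0] = 0x58;  /* illustrative ID value */
        return 0;
    }
    return -1;  /* no answer: simulates a missing or badly soldered part */
}

static const pt_hal fake_port = { .i2c_read = fake_i2c_read };
```

A product port only fills in the members its hardware needs; unfilled slots stay `NULL`, and the generic code reports them as unsupported rather than crashing.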

Since the framework implements the protocol core, the rest of the implementation only needs to cover the product-specific hardware blocks. The word “only” is an important one here: we have seen that this alone cuts development time dramatically. Because most of the software is already done and tested, the basic parts of the HAL software for a new product can be created in hours instead of the weeks or months it used to take. Next, since the protocol stays the same, we can develop the test logic outside the DUT software and even reuse that logic across different products. And there is more: we use the same approach to generalize the automation…

How It Works

Consider a simple example: two different products that contain the same sensor IC (Integrated Circuit) and the same display IC, but otherwise different hardware. For the sake of demonstration, let’s assume both ICs are connected to each product’s MCU using an I2C (Inter-Integrated Circuit) bus, which is a typical architecture for sensors (and some displays). The production tests for the sensor and display in these two products could be something like:

- test that the sensor is soldered at address “Ap” and returns its identification

- test that the sensor returns a correct pressure reading

- test that the display is soldered at address “Ad” and returns its identification

- test that the display correctly shows text and/or pixels

All of these tests depend on the hardware being tested. Their communication interfaces are described in the hardware specifications of the sensor and display components, and they do not depend on the MCU the components are attached to, apart from the required I2C bus. So we have four tests and two products. How many tests do we need to implement in total? Only four, because the test logic stays the same even when the product changes (remember that the sensor and display ICs are the same in both devices). The older method would have implemented eight tests (four per device), so we save 50 % here. Better yet, should we need to try or switch to another sensor (still attached to the I2C bus), we just remove the old one and solder (or “wire”) in the new one. The software does not change at all (no work there), and we take the test commands from the specification of the new sensor. The test case is updated in the automation using a text editor, which means less embedded programming and therefore faster changes to the test system.
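The reuse argument can be illustrated with a sketch of the automation side: the identification tests are written once as data and run unchanged against both products, which in this sketch differ only in the I2C bus number. Everything here is an assumption for illustration: `id_test`, `run_id_tests`, the `dut_reg_read` transport, and the address, register and ID values, which are placeholders standing in for “Ap”, “Ad” and the real datasheet values.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical transport: asks the DUT (over the common protocol) to read
 * one register and returns the byte, or -1 if the device did not answer. */
typedef int (*dut_reg_read)(unsigned bus, uint8_t addr, uint8_t reg);

/* One identification test, written once from the component datasheet. */
typedef struct {
    const char *name;
    uint8_t addr;      /* I2C address of the component */
    uint8_t id_reg;    /* register holding the identification value */
    uint8_t expected;  /* value the datasheet specifies */
} id_test;

/* Shared by both products: same ICs, so the same test data. */
static const id_test shared_tests[] = {
    { "sensor_id",  0x76, 0xD0, 0x58 },  /* placeholder values */
    { "display_id", 0x3C, 0x00, 0x43 },  /* placeholder values */
};

/* Runs every identification test on one product's bus; returns passes. */
size_t run_id_tests(const id_test *tests, size_t count,
                    unsigned bus, dut_reg_read read)
{
    size_t passed = 0;
    for (size_t i = 0; i < count; i++) {
        int value = read(bus, tests[i].addr, tests[i].id_reg);
        if (value == tests[i].expected)  /* -1 never matches a byte */
            passed++;
    }
    return passed;
}

/* Host-side fake DUT: both components answer only on the populated bus. */
static unsigned fake_populated_bus = 0;

static int fake_read(unsigned bus, uint8_t addr, uint8_t reg)
{
    if (bus != fake_populated_bus)
        return -1;
    if (addr == 0x76 && reg == 0xD0)
        return 0x58;
    if (addr == 0x3C && reg == 0x00)
        return 0x43;
    return -1;
}
```

Swapping the sensor for a different part would, in this model, mean editing one row of `shared_tests`, with no change to the DUT software.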

To ease porting the system to a new platform and to keep resource usage low, we have implemented this core functionality almost solely in C. Furthermore, the porting tasks have been streamlined for easy integration with Continuous Integration (CI) build systems. Using the example code that MCU suppliers typically provide in their Board Support Package (BSP), software engineers can perform the initial porting of the framework very quickly, in the following steps:

- enabling code to control LEDs on the platform

- gradually adding configuration for system clocking and for communication over UART (Universal Asynchronous Receiver-Transmitter)

- then I2C, SPI, GPIO, etc., depending on the product
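The staged bring-up above can be modelled as an ordered list of initialization steps, where the port grows as more steps are filled in. This is a minimal sketch under that assumption; `port_stage`, `port_bring_up` and the stub stages are hypothetical and stand in for code adapted from the supplier’s BSP examples.

```c
#include <stddef.h>

/* Hypothetical bring-up stage: one initialization step of a new port.
 * init() returns 0 on success. */
typedef struct {
    const char *name;
    int (*init)(void);
} port_stage;

/* Runs the stages in order and stops at the first failure.
 * Returns the number of stages that came up successfully. */
size_t port_bring_up(const port_stage *stages, size_t count)
{
    size_t done = 0;
    while (done < count && stages[done].init() == 0)
        done++;
    return done;
}

/* Stubs for an early port: LED and clock+UART work, I2C is not done yet. */
static int stage_led(void)        { return 0; }
static int stage_clock_uart(void) { return 0; }
static int stage_i2c(void)        { return -1; /* not ported yet */ }

static const port_stage early_port[] = {
    { "led",        stage_led },
    { "clock+uart", stage_clock_uart },
    { "i2c",        stage_i2c },
};
```

Once the stage count reaches the UART step, the communication channel exists and test cases for the later stages can be run as soon as those stages are filled in.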

Meanwhile, the automation layer can start executing test cases as soon as the communication channel works. UART is chosen for the initial communication because of its simplicity and wide support among MCUs, but other buses can be used as well.
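Because a UART delivers bytes one at a time, the DUT side has to assemble them into complete commands before dispatching them. A minimal sketch, assuming newline-terminated text commands (the actual framing is not specified here); `pt_rx`, `pt_rx_byte` and the 64-byte line limit are hypothetical:

```c
#include <stddef.h>

#define PT_LINE_MAX 64  /* hypothetical maximum command length incl. NUL */

/* Hypothetical receive state for a line-based protocol over UART. */
typedef struct {
    char   buf[PT_LINE_MAX];
    size_t len;
    int    overflow;  /* set when a line exceeds PT_LINE_MAX - 1 chars */
} pt_rx;

/* Feeds one received byte (e.g. from a UART RX interrupt or polling loop).
 * Returns 1 when a complete NUL-terminated line is ready in rx->buf,
 * -1 when an overlong line was discarded, 0 otherwise. The caller must
 * consume rx->buf before feeding the next byte. */
int pt_rx_byte(pt_rx *rx, char c)
{
    if (c == '\r')
        return 0;  /* tolerate CRLF line endings */
    if (c == '\n') {
        int status = rx->overflow ? -1 : 1;
        rx->buf[rx->len] = '\0';
        rx->len = 0;
        rx->overflow = 0;
        return status;
    }
    if (rx->len < PT_LINE_MAX - 1)
        rx->buf[rx->len++] = c;
    else
        rx->overflow = 1;  /* keep reading until newline, then report */
    return 0;
}
```

A complete line would then be handed to the command dispatcher; reporting overlong lines instead of silently truncating them keeps the automation from acting on a corrupted command.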

Conclusions

Our experience with the automated software framework approach has been overwhelmingly positive. It has proven very useful, especially in IoT device production testing, where efficiency and cost savings are important both for us and for our customers. Beyond production testing, our engineers have also found other use cases for this software. For instance, both hardware and software engineers can use it in development work to quickly evaluate new functionality of the product platform, the MCU and peripherals. And the solution continues to evolve: we have already added new modules without breaking compatibility for existing users.

***


Timo Räty, Senior Principal Engineer, Bittium

Timo has been hooked on software and computers since he first laid his hands on an Apple II back in the ’80s. Still passionate about software, he has been contributing to Bittium’s software assets since 2013. His work varies greatly, production testing systems and software analytics being just two examples. His hobby projects concentrate on tinkering with various ARM Cortex-M microcontrollers using C and assembly, and, time permitting, he is pursuing studies towards a doctoral degree.